If you’ve been following anything in the Cloud Native space right now, chances are that you’ve heard of WebAssembly (Wasm). As someone who works at a Wasm company, it should come as no surprise that I think Wasm is the future of software development. But, let’s be honest, you probably aren’t going to just dismiss Kubernetes and go all-in on the first Wasm-related project you find.

At Cosmonic, we’ve always believed it important that Wasm and wasmCloud (the soon-to-be incubating CNCF project we contribute to and help maintain) are compatible with, but not dependent on, any pre-existing technology. Guided by that principle, we have long provided integrations with Kubernetes, as most people operating in the cloud native ecosystem are running in or integrated with it. What has been interesting to see is how people are choosing to integrate with it. This post outlines a couple of ways to integrate Wasm with Kubernetes and explains why we’ve designed our platform to integrate with Kubernetes the way it does. With that in mind, let’s dive in!

Wasm is different from containers

I know the title of this section sounds self-evident, but it is important to call out that Wasm is different from containers. This is a key point that sometimes gets lost in Kubernetes-land. Having been part of the Kubernetes community and related projects since 2016, I know that it is really easy to have a Kubernetes-shaped hammer and see everything as a nail.

Much has been written about the benefits of Wasm, but to briefly summarize, here are some of the key benefits and differentiators:

- Small size: Wasm binaries are generally half the size or less of an equivalent application in an optimized container. This can greatly decrease download times when starting.

- Cross-platform and cross-language: Any language that can compile to Wasm (more languages are adding support as time goes on) can then run on any architecture and operating system. It is the closest thing to “write once, run anywhere.” We like to pretend that containers run everywhere, but if we’re being honest with ourselves, they don’t. You have to compile a different image per architecture, and things like Windows containers work entirely differently from Linux containers.

- Sandboxed by default: Wasm does not use cgroups or other container primitives. It must be explicitly granted permission to do, well, pretty much anything. This means that you can run lots of (possibly untrusted) code all on a single machine and often within a single process.

- Fast startup: Because it is so small and lightweight, Wasm starts extremely quickly, which means it effectively solves the cold start problem.

But that is just plain Wasm! Beyond that is another standard, in progress at the W3C, called the component model that makes Wasm even more powerful and more different from containers.

The key thing to understand about the component model is how it enables what we’ve called “interface driven development.” Dependencies are now expressed through a defined interface, and anything that implements that interface can be used to satisfy that dependency (think of an interface to a datastore, such as a key-value database). That means developers can just write their code, and the concrete dependencies can be added later. Also, because Wasm can be built from various languages, you could have your business logic written in Go and your dependency written in Rust! Ultimately, components enable all sorts of new platforms and runtimes. Obviously, wasmCloud is the one we use, because it enables heterogeneous compute to run your code seamlessly across clouds, operating systems and platforms, but there are many others out there.
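To make “interface driven development” a little more concrete, here is a minimal sketch in plain Python. It is an analogy, not the component model itself: real components declare their interfaces in the component model's own tooling, and every name below (`KeyValue`, `InMemoryStore`, `PrefixedStore`, `count_visit`) is made up for illustration. The point is only that the business logic depends on the interface, so the provider can be swapped without touching it.

```python
from typing import Optional, Protocol


class KeyValue(Protocol):
    """Hypothetical interface: the only thing the business logic knows about."""

    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """One provider of the interface, handy for local development."""

    def __init__(self) -> None:
        self._data = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class PrefixedStore:
    """A second provider that namespaces keys before delegating to another store."""

    def __init__(self, inner: KeyValue, prefix: str) -> None:
        self._inner = inner
        self._prefix = prefix

    def get(self, key: str) -> Optional[str]:
        return self._inner.get(self._prefix + key)

    def set(self, key: str, value: str) -> None:
        self._inner.set(self._prefix + key, value)


def count_visit(store: KeyValue, user: str) -> int:
    """Business logic written purely against the interface."""
    visits = int(store.get(user) or "0") + 1
    store.set(user, str(visits))
    return visits
```

Calling `count_visit(InMemoryStore(), "ada")` and `count_visit(PrefixedStore(InMemoryStore(), "prod/"), "ada")` runs the same logic against two different providers, which is roughly the swap the component model lets you make across language boundaries.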

That is enough background information for now, but I’d highly recommend giving Luke Wagner’s talk on the component model a listen to learn even more!

Integrating with and extending Kubernetes

One of the things that has made Kubernetes so popular is its extensibility. For better or for worse, you can extend Kubernetes at almost any layer. By far the most common and, more importantly, the most “standardized” (I use that term loosely) way to extend a Kubernetes cluster is through the use of custom resource definitions (CRDs). I’ve had a love/hate relationship with CRDs and, personally, think they are overused (they have inherent flaws that make them hard to manage). Regardless of how I feel about them, almost everyone has processes for installing and managing CRDs inside their business. For all intents and purposes, they are the integration point into Kubernetes for 80-90% of the user base. You want to add a new feature or integrate with an existing tool or service? You’d better provide a CRD and a controller/operator. Here at Cosmonic, we recently updated our Kubernetes integration to use an operator precisely for this reason, and we’re likely to contribute an operator back to wasmCloud as well to replace the current Helm chart.

So, the second key concept to understand and keep in mind is that most cluster operators (SREs and Platform Engineers) expect to work with CRDs when adding functionality to Kubernetes or leveraging other technology from it.
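If you haven't written a controller before, the core idea is a reconcile loop: compare what the custom resources declare with what is actually running, then work out the actions that close the gap. Here is a rough sketch of that comparison in plain Python. It uses no Kubernetes client library, and `DesiredApp` and `reconcile` are hypothetical names for illustration, not part of any real operator.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class DesiredApp:
    """Hypothetical custom resource: what the user declared."""

    name: str
    replicas: int


def reconcile(desired: list[DesiredApp], running: dict[str, int]) -> list[str]:
    """Return the actions needed to move `running` toward `desired`.

    `running` maps an app name to the number of replicas currently observed.
    """
    actions = []
    wanted = {app.name: app.replicas for app in desired}

    # Scale anything that is declared but not at the declared size.
    for name, replicas in sorted(wanted.items()):
        current = running.get(name, 0)
        if current < replicas:
            actions.append(f"scale up {name}: {current} -> {replicas}")
        elif current > replicas:
            actions.append(f"scale down {name}: {current} -> {replicas}")

    # Remove anything that is running but no longer declared.
    for name in sorted(running.keys() - wanted.keys()):
        actions.append(f"delete {name}")

    return actions
```

A real controller would run this comparison every time a resource changes and then carry out the actions against whatever system it manages. That system does not have to be a container runtime, which is what makes this integration point interesting for Wasm.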

Alongside vs Wrapped

You’re probably thinking at this point “wow Taylor, those are a lot of words to get to your point,” but you’ll see why those points are so critical here. To review:

- Wasm and the Component Model enable powerful new ways (often fundamentally different from containers) to build and manage applications.

- The most common and expected integration point for Kubernetes is via CRDs and controllers/operators.

To follow up, I want to say something that may feel a little stupid, but is critical to understand: Kubernetes is for containers. It isn’t for anything else. I have built Kubernetes platforms at three large Fortune 100 companies, which included working on AKS and working with the customers of the BigCorps I was a part of. I was a Helm maintainer for years, working on large chunks of Helm 2 and Helm 3. I was one of the co-creators of Krustlet (which was preceded by Wok). I say all this not to brag, but to point out that, at this point, I know Kubernetes expects everything to look and act like a container, full stop.

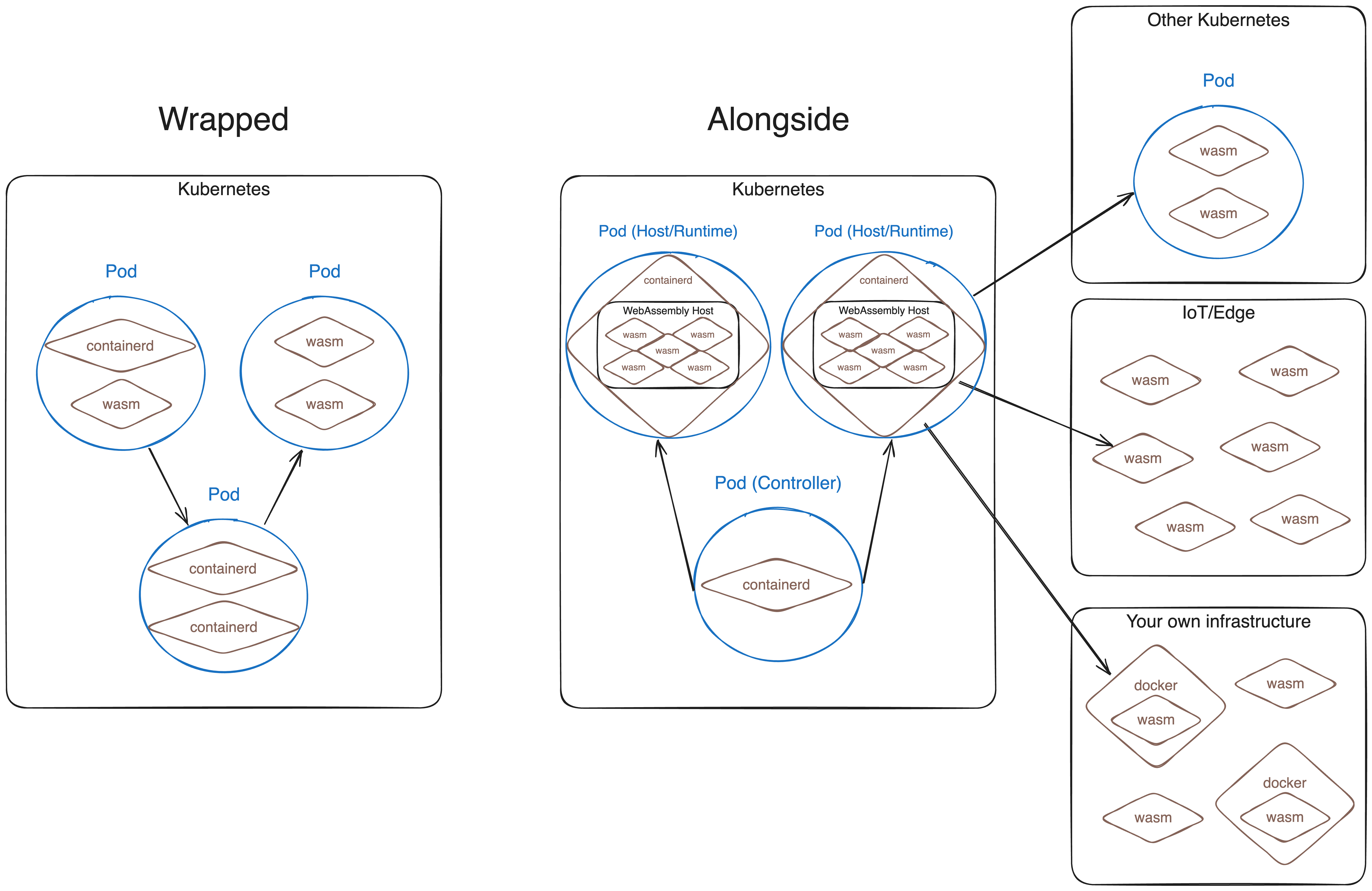

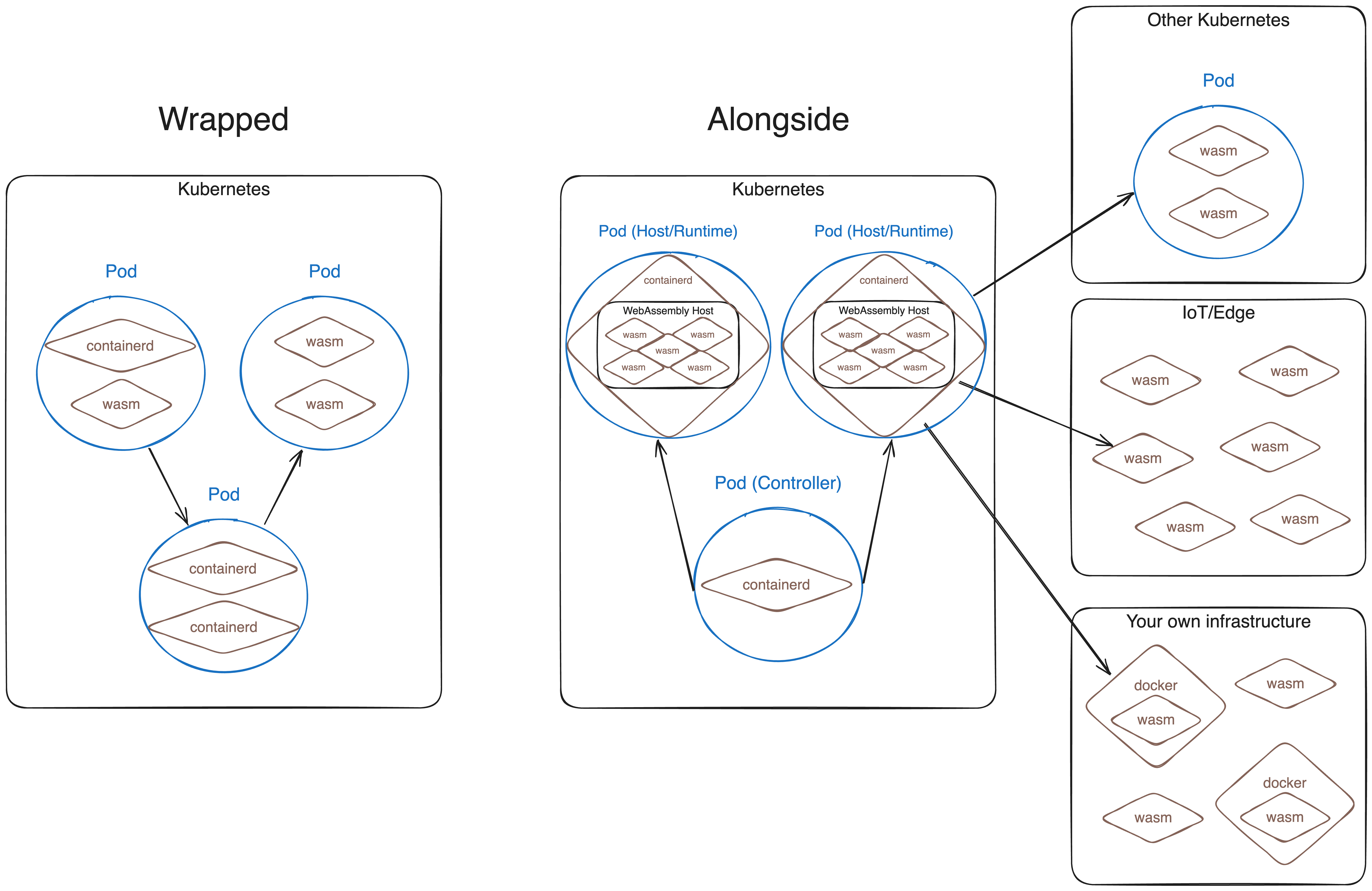

If Kubernetes expects things to look like a container, why would we want to hamper the full power of Wasm by constraining it to fit into a container-centric box? This brings me to the two different ways Wasm can work with Kubernetes. The first is “Wrapped,” meaning that we wrap Wasm to look and act like a container. The most obvious, polished, and fully featured example of this is Runwasi. The second is “Alongside,” meaning that you integrate with Kubernetes, but as a separate “service.” Obviously, this looks like the controller+CRD model discussed above.

To be clear, I have nothing bad to say about Runwasi! It is a great project with some great people behind it. If you are looking for a way to run your first Wasm component in an ecosystem that is familiar, or just want a smaller, faster, more portable “container,” then Runwasi is a great fit. But the future of Wasm is so much more than that.

The inherent weakness of wrapping Wasm in Kubernetes is that it focuses only on the aforementioned key benefits of Wasm, while ignoring the new platforms, runtimes, and technologies that the component model enables. For example, we wrote a tool called Wadm that allows for declarative application management where the pieces of the application are just Wasm components. There is no way to express these kinds of relationships within the bounds of Kubernetes because, once again, it expects everything to look and act like a container. For a more in-depth dive on why we built this and why new platforms built on Wasm won’t fit neatly inside of Kubernetes, check out the talk we gave recently at Cloud Native Wasm Day.
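To give a feel for the kind of relationship I mean, here is a small Python sketch of an application described as components plus the links between them. This is not Wadm's manifest format or API. `Component`, `unsatisfied_imports`, and the interface name `example:keyvalue/store` are all invented for illustration. It only shows the shape of the problem: each component imports and exports interfaces, and the application is complete when every import is linked to a component that exports it.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Component:
    """Hypothetical description of one Wasm component in an application."""

    name: str
    imports: set[str] = field(default_factory=set)
    exports: set[str] = field(default_factory=set)


def unsatisfied_imports(
    components: list[Component],
    links: list[tuple[str, str, str]],
) -> list[tuple[str, str]]:
    """Return (component, interface) pairs that no valid link satisfies.

    Each link is (consumer, interface, provider). A link only counts if the
    consumer really imports the interface and the provider really exports it.
    """
    by_name = {component.name: component for component in components}
    satisfied = set()
    for consumer, interface, provider in links:
        source = by_name.get(consumer)
        target = by_name.get(provider)
        if source is None or target is None:
            continue
        if interface in source.imports and interface in target.exports:
            satisfied.add((consumer, interface))

    return [
        (component.name, interface)
        for component in components
        for interface in sorted(component.imports)
        if (component.name, interface) not in satisfied
    ]
```

With an `api` component that imports `example:keyvalue/store` and a `kv` component that exports it, the function reports the import as missing until a link `("api", "example:keyvalue/store", "kv")` is declared. A pod spec has no natural place to put that kind of link, which is the gap I am describing.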

Also, wrapping expects people to integrate with Kubernetes at the node level, where you need to build a custom image and/or have some sort of admin access to enable Wasm for your developers. As stated above, this is not the way people expect to extend Kubernetes. They expect the extension to run alongside the things already running inside of Kubernetes. Using this “alongside” model means it is really easy to tie these things directly into existing pipelines and tools like ArgoCD (we recently released a demo showing how this all works, and it is cool).

To be clear, this isn’t an “either/or” decision. I imagine there are some advanced use cases where we would want to run Wasm and containers within the same pod, and that would work while also integrating other Wasm tools via CRDs and controllers. What I do mean, though, is that we believe the future of integration with Wasm plus the component model, and all its benefits, will primarily be realized by running things alongside Kubernetes and not solely wrapped by it.

Conclusion

This is far from all the information we could share on this topic, but we wanted to be clear and state where we think the future of Kubernetes and Wasm is going and why. If you take anything away from this post, it is that Wasm and the component model have enabled incredible new ways to build platforms. These new platforms and Kubernetes can complement each other very well but, arguably, only if the focus is on running alongside Kubernetes rather than being wrapped by it.