What is WebAssembly?

WebAssembly is not ‘the new containers’, but it does share many of the same benefits. It differs in a number of ways and draws on lessons learned from other developer technologies over the years (think Spring Boot, JVMs…).

First and foremost, WebAssembly (Wasm) is a way to package and, in turn, run code ‘somewhere’. That ‘somewhere’ is a Wasm runtime. A few years ago, this meant just your web browser, but now, with modern runtimes, our packaged code (Wasm modules) can run on a server, in any cloud, on a Raspberry Pi, or on any embedded device for that matter.

But how!? To start, let’s do a quick refresher on how running applications has changed, starting with a simple application on a normal Linux box. An app on a normal machine talks to the machine via the kernel, which provides access to resources, from files on disk through to the CPU cycles needed to compute answers. All apps share the underlying resources and have access to the same things on the machine. That is good if everyone plays nicely, bad if someone is greedy or needs a different dependency. It is also terrible for security and process isolation. We used VMs to split apps out onto a ‘computer each’ to solve these security and isolation concerns, but that just meant we had lots of computers to look after.

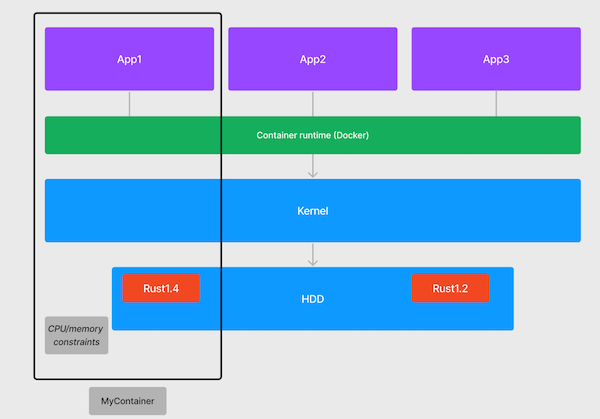

Next came containers. Using the magic of the Linux kernel (cgroups and namespaces), containers enabled us to isolate a single process from everything else, including a closed-off file system that only that app (process) can access. This means that my application’s dependencies can be bundled with it and installed ‘for it alone’: not conflicting, just managed, updated and secured for my app.

The ‘bundle of files’ is what we create when we run a Docker build; the magic of Docker then lets us tie an isolated running process and those files together. In this model, when you are building a Node app, you still end up with a Node binary built for Linux. To make this work, the ‘bundle of files’ for my container includes all the other bits of Node that it needs to run. Along with this, my application itself carries all the bits it requires, like how to communicate with a web server, a message queue, etc. This all fits into my one big final bundle.

This makes our container a complete closed loop, but it is also big, and it is a point-in-time snapshot of a lot of things.

So where does Wasm fit?

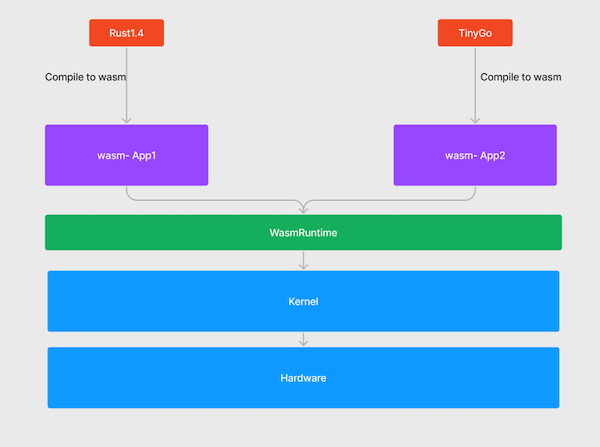

Wasm is not a ‘bundling’ technology like Docker; instead, it is a compile target. That means language dependencies are (somewhat, we will come back to this) removed and we are left with just a binary. This binary then runs on a Wasm runtime.

This is cool because it means that we are, essentially, removing the runtime coupling between our binary and the language and OS it was built from, so we can now ship just the binary. And with Wasm we still have a memory-safe, high-performance, OS- and platform-agnostic binary we can run anywhere, just like containers! Removing all this bundling also makes these binaries tiny, up to 200 times smaller than a container.
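To make ‘just a binary’ a little more concrete, here is a minimal sketch in Python that walks the section headers of a tiny Wasm module. The module bytes are hand-assembled for illustration and export a single hypothetical `add` function; the helper names (`read_uleb128`, `list_sections`) are ours, not part of any Wasm tooling. The script only inspects the binary. Actually running it would still need a Wasm runtime.

```python
# A hand-assembled Wasm module exporting a hypothetical add(i32, i32) -> i32.
MODULE = bytes([
    0x00, 0x61, 0x73, 0x6D,  # magic number: "\0asm"
    0x01, 0x00, 0x00, 0x00,  # binary format version 1
    0x01, 0x07, 0x01, 0x60, 0x02, 0x7F, 0x7F, 0x01, 0x7F,  # type section
    0x03, 0x02, 0x01, 0x00,                                # function section
    0x07, 0x07, 0x01, 0x03, 0x61, 0x64, 0x64, 0x00, 0x00,  # export section ("add")
    0x0A, 0x09, 0x01, 0x07, 0x00, 0x20, 0x00, 0x20, 0x01, 0x6A, 0x0B,  # code section
])

SECTION_NAMES = {
    0: "custom", 1: "type", 2: "import", 3: "function", 4: "table",
    5: "memory", 6: "global", 7: "export", 8: "start", 9: "element",
    10: "code", 11: "data", 12: "data count",
}


def read_uleb128(data, pos):
    """Decode an unsigned LEB128 integer; return (value, next position)."""
    result = 0
    shift = 0
    while True:
        byte = data[pos]
        pos += 1
        result |= (byte & 0x7F) << shift
        if (byte & 0x80) == 0:
            return result, pos
        shift += 7


def list_sections(data):
    """Return (section name, payload size in bytes) for each section."""
    if data[:4] != b"\x00asm":
        raise ValueError("not a Wasm binary")
    if int.from_bytes(data[4:8], "little") != 1:
        raise ValueError("unsupported Wasm version")
    sections = []
    pos = 8
    while pos < len(data):
        section_id = data[pos]
        size, pos = read_uleb128(data, pos + 1)
        sections.append((SECTION_NAMES.get(section_id, "unknown"), size))
        pos += size  # skip the payload, we only want the headers
    return sections


print(len(MODULE), "bytes:", list_sections(MODULE))
```

Nothing in those 41 bytes refers to an operating system, a CPU architecture or a language runtime, which is why the same file can be handed to any compliant Wasm runtime.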

But… this isn’t a realistic example, is it? Who has a Dockerfile containing just the language that actually makes it out into production? In reality, these are often vast beasts (as are the apps behind them), with tons of different ‘bits’ that come together to make the app work, from logging to HTTP servers. The Mastodon Dockerfile (the `Dockerfile` on the `main` branch of the mastodon/mastodon repository) is a great example of what we mean.

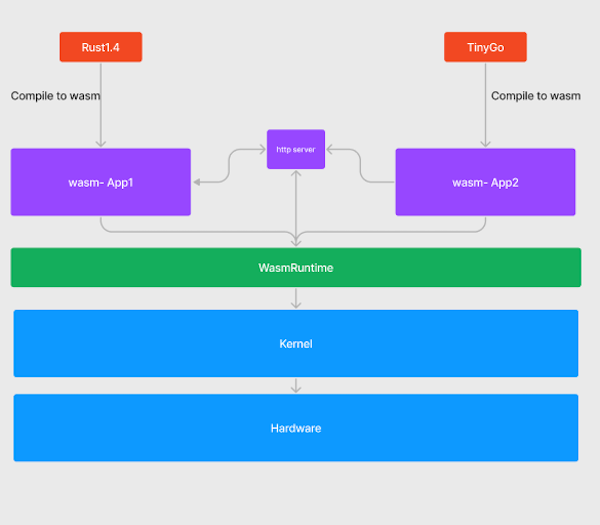

So, what happens to the rest of the dependencies that were bundled with Docker, the things that we include time and again like an HTTP server, logging and messaging? In Docker these are still build-time dependencies; in Wasm components they become runtime dependencies through the power of contracts. This means we still depend on a known way of communicating and working with a component, but we don’t include it in our app explicitly as a binary. We just expect it to be there and to work the way we expect.

This is very cool because it means that we can:

- Share these dependencies to increase density

- Swap them out easily to update them to address security issues

- Have less repetitive code in our applications, relying on these at runtime through our contracts. This means less stuff to support and less time writing it again and again and again…

Imagine if fixing Log4j had been as simple as swapping out the one shared, scaled ‘Log4j provider’ that everyone relied on, in one place and all in one go. That is the power of runtime rather than build-time dependencies.
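The idea of depending on a contract rather than on a bundled implementation can be sketched in plain Python. This is only an analogy, not Wasm component tooling: the `Logger` contract, the two providers and `handle_request` are all hypothetical names made up for illustration, and the ‘flaw’ is a toy stand-in for a real vulnerability.

```python
from typing import List, Protocol


class Logger(Protocol):
    """The contract: the only thing the application knows about logging."""

    def log(self, message: str) -> None: ...


class VulnerableLogger:
    """Hypothetical provider with a toy flaw: it expands ${secret} lookups."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def log(self, message: str) -> None:
        self.lines.append(message.replace("${secret}", "hunter2"))


class PatchedLogger:
    """Hypothetical fixed provider: it records the message verbatim."""

    def __init__(self) -> None:
        self.lines: List[str] = []

    def log(self, message: str) -> None:
        self.lines.append(message)


def handle_request(user_input: str, logger: Logger) -> str:
    """Application code: written once, against the contract only."""
    logger.log(f"request: {user_input}")
    return "ok"


# The 'runtime' decides which provider satisfies the contract.
provider = VulnerableLogger()
handle_request("${secret}", provider)
print(provider.lines)

# Fixing the flaw means swapping the provider; handle_request is untouched.
provider = PatchedLogger()
handle_request("${secret}", provider)
print(provider.lines)
```

The application never imports or ships a logging implementation. It only states what it expects, so the fix lands in one place and every app that relies on the contract picks it up.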

So what does this actually look like? A bit like this… but it’s still super simple.