By enabling cloud-native back ends to run on operational edges, Wasm allows us to deploy business logic closer to users or data, even to places Kubernetes and containers can't go.

This article first appeared in InfoWorld as part of the New Tech Forum.

Over the last decade, we've witnessed organizations of all sizes adopt containers to power the great "lift and shift" to the cloud. Looking ahead to the next decade, we see a new trend emerging, one dominated by distributed operations. Organizations are struggling to meet their goals for performance, regulatory compliance, autonomy, privacy, cost, and security, all while attempting to move intelligence from data centers to the edge.

How, then, can engineers optimize their development methodologies to smooth the journey to the edge? The answer could lie with the WebAssembly (Wasm) Component Model. As developers begin creating Wasm component libraries, they will come to think of them as the world's largest crate of LEGOs. In the past, we brought our data to our compute. Now we are entering an age where we take our compute to our data.

What do we mean by 'the edge'?

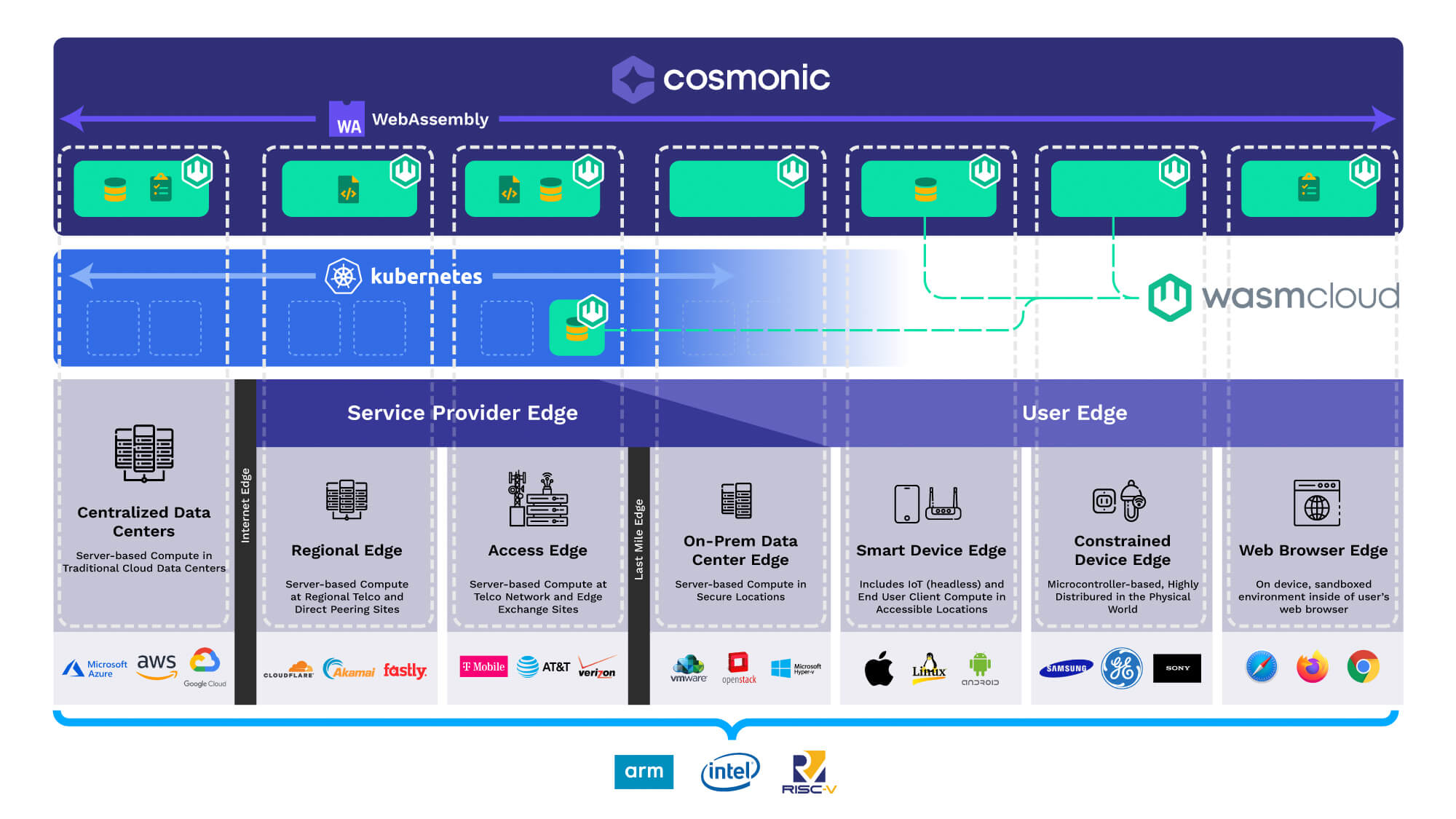

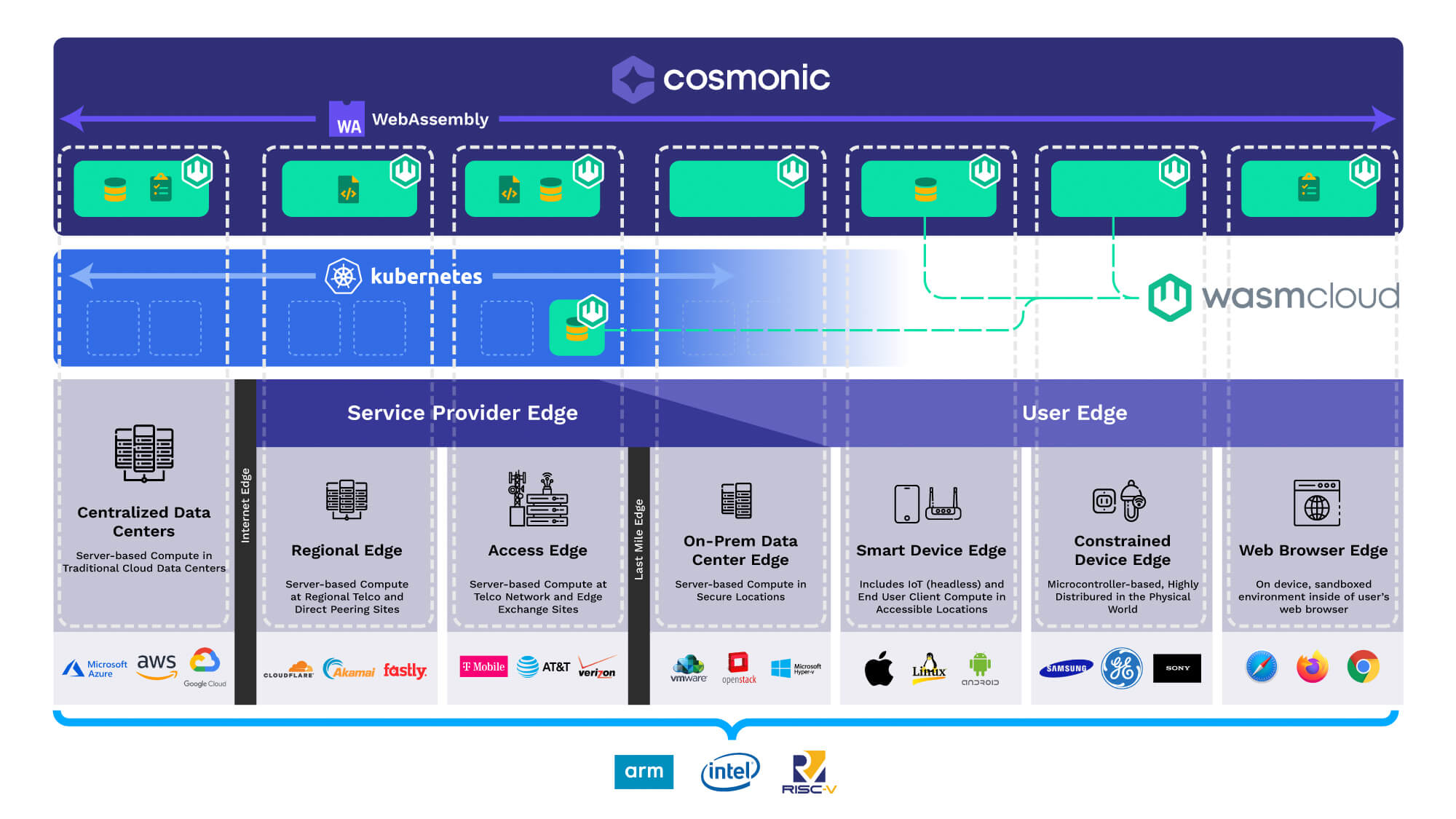

There are many different definitions of edge computing. Some see it as the "near edge," i.e., a data center positioned near a user. Sometimes it means the CDN edge. Some imagine devices at the "far edge," i.e., IoT devices or sensors in resource-constrained environments. When we think of the edge, and how it relates to Wasm, we mean the device that the user is currently interacting with. That could be a smartphone, a car, a train, a plane, or even the humble web browser. The "edge" means the ability to put on-demand computational intelligence in the right place, at the right time.

Figure 1. Applications in Cosmonic run on any edge from public clouds to users at the far edge.

According to NTT's 2023 Edge Report, nearly 70% of enterprises are fast-tracking edge adoption to gain a competitive edge (pun intended) or tackle critical business issues. It's easy to see why. The drive to put real-time, rewarding experiences on personal devices is matched by the need to put compute power directly into manufacturing processes and industrial appliances.

Fast-tracking edge adoption makes commercial sense. In delivering mobile-first architectures and personalized user experiences, we reduce latency and improve performance, accuracy, and productivity. Most importantly, we deliver world-class user experiences at the right time and in the right place. Fast-emerging server-side standards, like WASI (the WebAssembly System Interface) and the WebAssembly Component Model, allow us to deliver these outcomes in edge solutions more quickly, with more features, and at a lower cost.

A path to abstraction

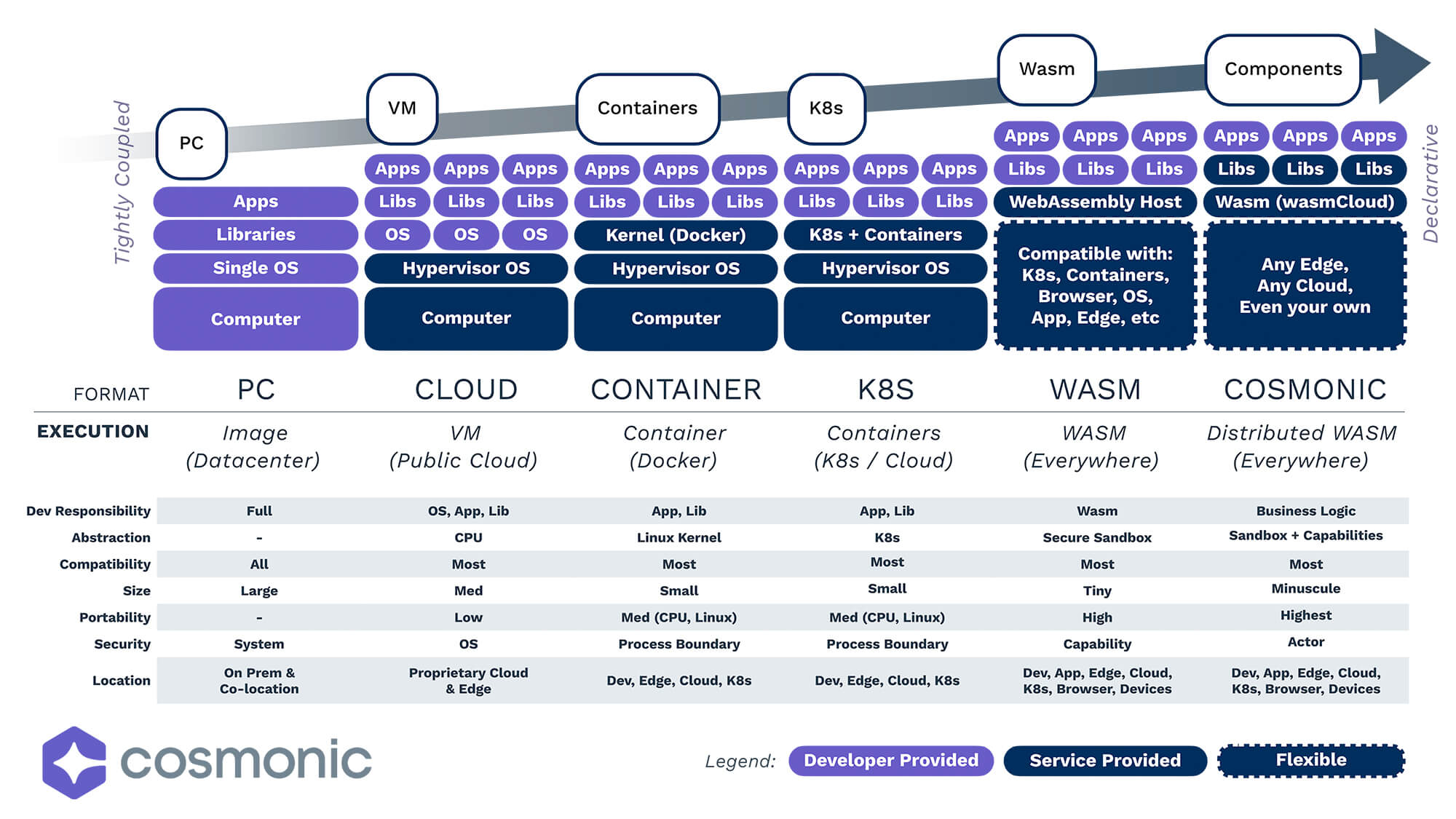

Over the last 20 years, we have made enormous progress in abstracting common complexities out of the development experience. Moving these layers to standardized platforms means that, with each wave of innovation, we have simplified the effort, reduced time-to-market, and increased the pace of innovation.

Figure 2. Epochs of technology.

VMs decoupled operating systems from specific machines, ushering in the era of the public cloud. We stopped relying on expensive data centers and the complicated processes that came with them. As the need grew to simplify the deployment and management of operating environments, containers emerged, bringing the next level of abstraction. As monolithic architectures became microservices, Kubernetes paved the way to run applications in smaller, more constrained environments.

Kubernetes is great at orchestrating large clusters of containers at scale across distributed systems. Its smaller sibling orchestrators (K3s, KubeEdge, MicroK8s, and MicroShift) take this a step further, allowing containers to run in smaller footprints. There are some famous use cases, such as those of the US Air Force and the International Space Station. Can you run Kubernetes on a single device? Absolutely. Can you push it down to pretty small devices? Yes. But, as companies are finding, there is a point where the cost of operating Kubernetes exceeds the value extracted.

Kubernetes challenges at the edge

In the military, we might describe the constraints of mobile architecture using the language of size, weight, and power—SWaP for short. At a certain scale, Kubernetes works well at the edge. However, what if we want to run applications on Raspberry Pis or on personal devices?

It's possible to optimize Docker containers, but even the smallest, most optimized container would sit somewhere around 100 MB to 200 MB. Standard Java-based containerized applications often run in the gigabytes of memory per instance. This might work for airplanes but, even here, Kubernetes is resource-hungry. By the time you bring everything needed to operate Kubernetes onto your jet, 30% to 35% of that device's resources are occupied just in operating the platform, never mind doing the job for which it's intended.

Devices that return to a central data center, office, or maintenance facility may have the bandwidth and downtime to perform updates. However, truly "forward-deployed" mobile devices may be only intermittently connected over low-bandwidth links, or difficult to service. Capital assets like ships, buildings, and power infrastructure may be designed with a service life of 25 to 50 years, and over such a lifespan it's hard to imagine keeping them updated by shipping complex containers full of statically compiled dependencies.

What this means is that at the far edge, on sensors and devices, Kubernetes is too large for the resource constraints, even in its most minimal distributions. When bringing devices onto a ship, an airplane, a car, or a watch, the size, weight, and power constraints are too tight for Kubernetes to overcome. So companies are seeking lighter-weight and more agile ways to deliver capabilities to these places.

Even highly optimized and minimized containers can have cold start times measured in seconds. Real-time applications, such as messaging apps, streaming services, and multiplayer games, must respond to a round-trip request in less than 200 milliseconds to feel interactive, so their containers must already be running, idle, before requests arrive at the data center. This cost is incurred for every application and multiplied by the number of Kubernetes regions you operate: in each region, you'll have your applications pre-deployed and scaled to meet expected demand. This "lower bound problem of Kubernetes" means that even highly optimized Kubernetes deployments often operate with extensive idle capacity.
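The lower bound problem is, at heart, simple multiplication: idle capacity grows with the number of services, the replicas each one keeps warm, and the number of regions. The sketch below makes that arithmetic explicit. The `Service` type, the service names, the replica counts, and the memory figures are all hypothetical, chosen only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Service:
    """A hypothetical always-on service kept warm to avoid cold starts."""
    name: str
    min_replicas: int  # replicas running before any request arrives
    memory_mb: int     # memory reserved by each replica


def idle_floor_mb(services: list[Service], regions: int) -> int:
    """Memory held in reserve before the first request is served.

    Every service keeps its minimum replicas warm in every region,
    so the floor is (sum over services of replicas x memory) x regions.
    """
    per_region = sum(s.min_replicas * s.memory_mb for s in services)
    return per_region * regions


# Illustrative numbers only.
services = [
    Service("chat", min_replicas=2, memory_mb=512),
    Service("presence", min_replicas=2, memory_mb=256),
    Service("matchmaking", min_replicas=3, memory_mb=1024),
]

print(idle_floor_mb(services, regions=1))  # 4608
print(idle_floor_mb(services, regions=5))  # 23040
```

Adding a region or a service raises this floor linearly, whether or not any traffic ever arrives.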

The high cost of container management

Containers bring many benefits to the technology infrastructure, but they do not solve the serious overhead of managing the applications inside those containers. The most expensive part of operating Kubernetes is the cost of maintaining and updating "boilerplate" or non-functional code — software that is statically compiled and included in each application/container at build time.

A container's layers are combined with application binaries that typically include imported code, replete with vulnerabilities and frozen at compile time. Developers hired to deliver new application features find themselves "boilerplate farming" instead: to stay compliant with security and regulatory guidelines, they must constantly patch the non-functional code that makes up 95% of the average application or microservice.

The issue is particularly acute at scale. Adobe recently discussed the high cost of operating Kubernetes clusters at the 2023 Cloud Native WebAssembly Day in Amsterdam. Senior Adobe engineers Colin Murphy and Sean Isom shared, "A lot of people are running Kubernetes, but when you run this kind of multi-tenant setup, while it's operationally excellent, it can be very expensive to run."

As is often the case, when organizations find common cause, they collaborate on open source software. Adobe, for example, has joined WebAssembly platform as a service (PaaS) provider Cosmonic, BMW, and others in adopting CNCF wasmCloud. Through wasmCloud, they have collaborated to build an application runtime around WebAssembly that simplifies the application life cycle. Adobe has used wasmCloud to bring Wasm together with its multi-tenant Kubernetes estate to deliver features faster and at lower cost.

Wasm advantages at the edge

As the next major technical abstraction, Wasm aspires to address the common complexity inherent in the management of the day-to-day dependencies embedded into every application. It addresses the cost of operating applications that are distributed horizontally, across clouds and edges, to meet stringent performance and reliability requirements.

Wasm's tiny size and secure sandbox mean it can be safely executed everywhere. With a cold start time in the range of 5 to 50 microseconds, Wasm effectively solves the cold start problem. It is compatible with Kubernetes without being dependent on it. Its diminutive size means it can be scaled to a significantly higher density than containers and, in many cases, it can even be performantly executed on demand with each invocation. But just how much smaller is a Wasm module than a containerized application running on a lightweight Kubernetes distribution?

An optimized Wasm module is typically around 20 KB to 30 KB in size. Compared with a typical container image (usually a couple of hundred MB), the Wasm compute units we want to distribute are three to four orders of magnitude smaller. Their smaller size decreases load time, increases portability, and means that we can operate them even closer to users.
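To check the "orders of magnitude" claim, take the base-10 logarithm of the size ratio. The sketch below uses the figures quoted above, assumes decimal units (1 KB = 1,000 bytes) and a 200 MB container image, and, for the cold start comparison, assumes a 1-second container start against the 50-microsecond upper end quoted for Wasm. Those specific inputs are assumptions, not measurements.

```python
import math

KB = 1_000       # decimal units, matching "20 KB" and "200 MB" in the text
MB = 1_000_000


def orders_of_magnitude(larger: float, smaller: float) -> float:
    """log10 of the ratio between two quantities."""
    return math.log10(larger / smaller)


container_image = 200 * MB  # "a couple of hundred MB"
for module_kb in (20, 30):
    ratio = container_image / (module_kb * KB)
    print(f"{module_kb} KB module: {ratio:,.0f}x smaller "
          f"({orders_of_magnitude(container_image, module_kb * KB):.1f} orders)")

# Cold starts: assume 1 s for a container vs. the 50 µs upper end quoted for Wasm.
print(f"cold start: {orders_of_magnitude(1.0, 50e-6):.1f} orders")
```

Under these assumptions the size ratio falls between roughly 6,700x and 10,000x, and the cold start gap is of a similar magnitude.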

The WebAssembly Component Model

Applications that operate across edges often run into challenges posed by the sheer diversity of devices found there. Consider streaming video to edge devices. There are thousands of unique combinations of operating system, hardware, and version on which your application must perform well at scale. Teams today solve this problem by building different versions of their applications for each deployment domain: one for Windows, one for Linux, one for macOS, and that's just for x86. Common components are sometimes portable across these boundaries, but they are plagued by subtle differences and vulnerabilities. It's exhausting.

The WebAssembly Component Model allows us to separate our applications into contract-driven, hot-swappable components that platforms can fulfill at runtime. Instead of statically compiling code into each binary at build time, developers, architects, or platform engineers may choose any component that matches that contract. This enables the simple migration of an application across many clouds, edges, services, or deployment environments without altering any code.

This means that platforms like wasmCloud can load the latest version of a component at runtime, saving developers from costly application-by-application maintenance. Furthermore, different components can be connected at different times and locations depending on the context, current operating conditions, privacy, security, or any other combination of factors, all without modifying the original application. Developers are freed from boilerplate farming and can focus on features, and enterprises can compound these savings across hundreds, even thousands, of applications.
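The Component Model itself describes contracts in WIT (the Wasm Interface Type language) and links components inside a Wasm runtime. The Python sketch below only mimics the idea: a `typing.Protocol` stands in for the contract, and a plain dictionary stands in for the platform that decides which implementation fulfills it in each context. The `KeyValue` contract, both providers, and the `link` function are hypothetical and are not the wasmCloud or Component Model API.

```python
from typing import Callable, Optional, Protocol


class KeyValue(Protocol):
    """Hypothetical contract, standing in for an interface written in WIT."""

    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str) -> None: ...


class InMemoryStore:
    """One provider of the contract, e.g. for a disconnected far-edge device."""

    def __init__(self) -> None:
        self._data: dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


class AuditedStore(InMemoryStore):
    """Another provider that also records every write, e.g. for a regulated region."""

    def __init__(self) -> None:
        super().__init__()
        self.audit_log: list[str] = []

    def set(self, key: str, value: str) -> None:
        self.audit_log.append(key)
        super().set(key, value)


def count_visit(store: KeyValue, user: str) -> int:
    """Business logic written against the contract, not against a provider."""
    visits = int(store.get(user) or "0") + 1
    store.set(user, str(visits))
    return visits


# The "platform" decides which provider satisfies the contract in each context.
PROVIDERS: dict[str, Callable[[], KeyValue]] = {
    "far-edge": InMemoryStore,
    "regulated-region": AuditedStore,
}


def link(context: str) -> KeyValue:
    """Instantiate the provider configured for a deployment context."""
    return PROVIDERS[context]()
```

Calling `count_visit(link("regulated-region"), "alice")` runs exactly the same business logic as `count_visit(link("far-edge"), "alice")`; only the provider chosen by the platform differs, and swapping it requires no change to `count_visit`.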

The WebAssembly Component Model supercharges edge efforts as applications become smaller, more portable, and more secure by default. For the first time, developers can pick and choose pieces of their application, implemented in different languages and offering different value propositions, to suit more varied use cases.

You can read more about the WebAssembly Component Model in InfoWorld.

Wasm at the consumer edge

These fast-emerging standards are already revolutionizing the way we build, deploy, operate, and maintain applications. On the consumer front, Amazon Prime Video has been working with WebAssembly for 12 months to improve the update process for more than 8,000 unique device types, including TVs, PVRs, consoles, and streaming sticks. Doing this by hand would require a separate native release for each device type, which would adversely affect performance. Having joined the Bytecode Alliance (the representative body for the Wasm community), the company has replaced JavaScript with Wasm in selected elements of the Prime Video app.

With Wasm, the team reduced average frame times from 28 to 18 milliseconds, and worst-case frame times from 45 to 25 milliseconds. Memory utilization improved as well. In his recent article, Alexandru Ene reports that the investment in Rust and WebAssembly has paid off: "After a year and 37,000 lines of Rust code, we have significantly improved performance, stability, and CPU consumption and reduced memory utilization."
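Expressed as relative improvements, the Prime Video frame-time figures work out as follows. The calculation uses only the numbers quoted above; the helper name is ours, not Amazon's.

```python
def percent_reduction(before_ms: float, after_ms: float) -> float:
    """Reduction expressed as a percentage of the original value."""
    return (before_ms - after_ms) / before_ms * 100


# Frame times quoted for the Prime Video app, in milliseconds.
average = percent_reduction(28, 18)
worst_case = percent_reduction(45, 25)
print(f"average frame time: {average:.1f}% shorter")        # 35.7% shorter
print(f"worst-case frame time: {worst_case:.1f}% shorter")  # 44.4% shorter
```

The worst case improved proportionally more than the average, which is what users perceive as fewer stutters.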

Wasm at the development edge

One thing is certain: WebAssembly is already being rapidly adopted from core public clouds all the way to end user edges, and everywhere in between. The early adopters of WebAssembly, including Amazon, Disney, BMW, Shopify, and Adobe, are already demonstrating the phenomenal power and adaptability of this new stack.

For developers, early experiments hint at the benefits of bringing back-end functionality to laptops, mobiles, and remote CPUs. The big benefit of edge computing is bringing business logic as close to users or data as possible, because round-trip network latency often accounts for most of the time an individual request takes. Until now, exposing back-end functionality to end user devices has been difficult, expensive, and sometimes impossible, depending on the edge device architecture. Even in Kubernetes, locations must be specifically defined and configured.
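A back-of-the-envelope bound shows why proximity matters so much. The sketch assumes that a signal in optical fibre travels at roughly two thirds of the speed of light, about 200 km per millisecond, and ignores routing detours, queuing, and processing, all of which only add to the total. The distances are illustrative, not measurements.

```python
# Assumption: light in optical fibre travels at roughly two thirds of c,
# i.e. about 200 km per millisecond.
FIBRE_KM_PER_MS = 200.0


def min_round_trip_ms(distance_km: float) -> float:
    """Propagation-only lower bound on round-trip time over fibre.

    Real requests add routing detours, queuing, TLS handshakes, and
    processing on top of this, so actual latency is always higher.
    """
    return 2 * distance_km / FIBRE_KM_PER_MS


# Illustrative distances between a user and the code serving them.
for label, km in [("on the device", 0), ("metro edge", 50),
                  ("regional cloud", 1_000), ("another continent", 8_000)]:
    print(f"{label:>17}: at least {min_round_trip_ms(km):.1f} ms")
```

Under these assumptions, a user on another continent spends at least 80 ms of a 200-millisecond interactivity budget on propagation alone, before any server work begins, while logic running on the device itself pays nothing.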

With Wasm, cloud-native back ends can be distributed and run as easily as static assets are served to front-end users, much as a CDN operates. Because Wasm is a platform-agnostic binary format, it can run polyglot back ends in the cloud, on the edge, even in a web browser tab. As we look to the future, it won't matter which language we program in: Wasm will let us build applications that are local-first and edge-native.

Just as cloud computing has allowed companies to accelerate application development life cycles, WebAssembly is poised to further accelerate innovation in edge computing. By enabling cloud-native back ends to run on operational edges, Wasm allows for the distribution of business logic closer to users or data. And thanks to its platform-agnostic binary format, Wasm also transcends language barriers, empowering developers to build edge-native applications that prioritize local execution.