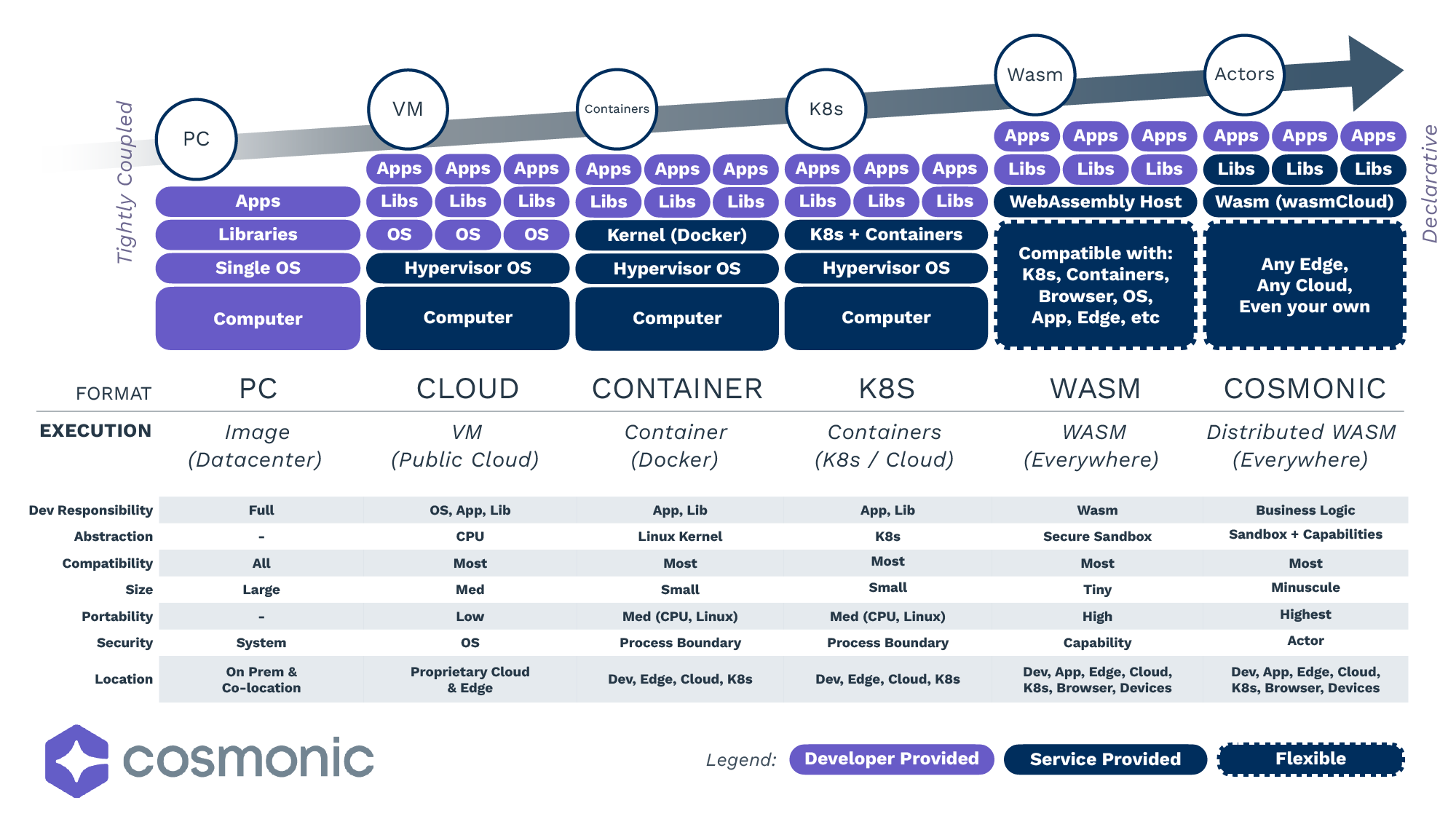

Over the last 20 years, we have made huge strides in abstracting common complexities away from developers. Wave after wave of innovation has driven the technology cycle, and enterprises have organized their delivery around raising the level of abstraction their developers target. With each wave, we have reduced effort, shortened delivery times, and hastened the pace of innovation.

Virtual machines broke the tight coupling between applications and individual machines, paving the way for the rise of the public cloud. With the public cloud, we stopped building our own data centers, and all the complex capacity, cooling, networking, and peering concerns disappeared with them. As we accelerated into the public cloud, we needed a simpler way to deploy and manage operating system environments, so containers emerged. A container gives us, for the most part, an isolated Linux user space in which we can easily package an application with its dependencies and move it between hosts. As containers have grown in popularity, we have adopted Kubernetes as a portable way to orchestrate them, decoupling application orchestration from any specific cloud.

However, developers today, especially enterprise developers, are still immensely frustrated by a host of common complexities. Building applications remains fraught with boilerplate code: the imported libraries that satisfy non-functional requirements (NFRs) around our core business logic, such as popular web-serving libraries, database drivers, and more. A recent study by Deloitte says that developers spend up to 80% of their time managing, maintaining, and operating this code.

The Problem with Containers

Containers have been essential over the last 10 years, a decade characterized by the great lift and shift of computing stacks from on-premises environments to the cloud. As enterprises have evolved their architectures, two glaring weaknesses have emerged.

The first is that, while containers bring many benefits to the technology infrastructure, they do nothing to reduce the serious overhead of managing the applications inside them. Developers hired to deliver new application features find themselves farming boilerplate instead: to stay secure and compliant with stringent regulatory guidelines, they must frequently patch the non-functional code that makes up 95% of the average application or microservice.

Enterprises try to automate as much of this complexity as possible through common golden templates, CI/CD, and automated vulnerability remediation, yet developers still stay in the loop for every individual application. It is frustratingly typical for development teams to fix the same vulnerability in each and every application across hundreds of teams. For systemic vulnerabilities such as the one in Log4j, this can bring work to a complete stop across every team. Worryingly, recent figures from Sysdig show that 87% of container images include at least one high or critical vulnerability.

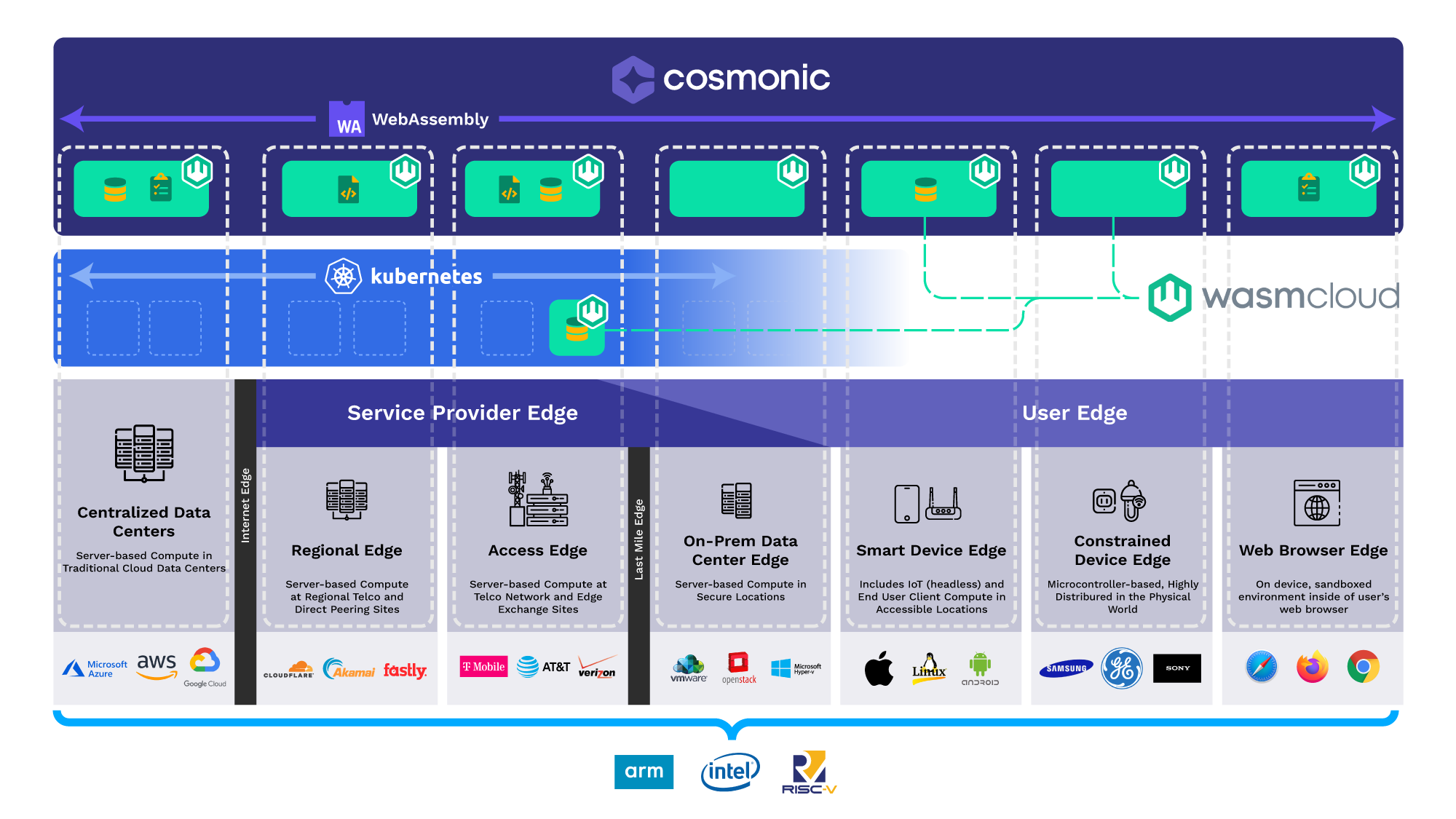

The second weakness is the high cost of operating containers in distributed Kubernetes environments. Two distinct factors drive up the cost of operating containers at scale. First, enterprises need any application that matters to meet the “-ilities” of software: reliability, availability, and more. If those applications fall short, businesses lose money and trust.

Second, users perceive applications as laggy when the round trip to them takes more than 200 milliseconds. According to Google, 53% of visits are abandoned if a mobile site takes longer than 3 seconds to load. In response, companies have invested heavily in expanding horizontally across many geographically dispersed regions.

Because containers have long cold-start times, each region runs at least one full copy of every application in the stack. Together, the cold-start problem and the “-ilities” of software make scaling Kubernetes clusters across clouds difficult, and that difficulty translates into incredibly high operating costs.

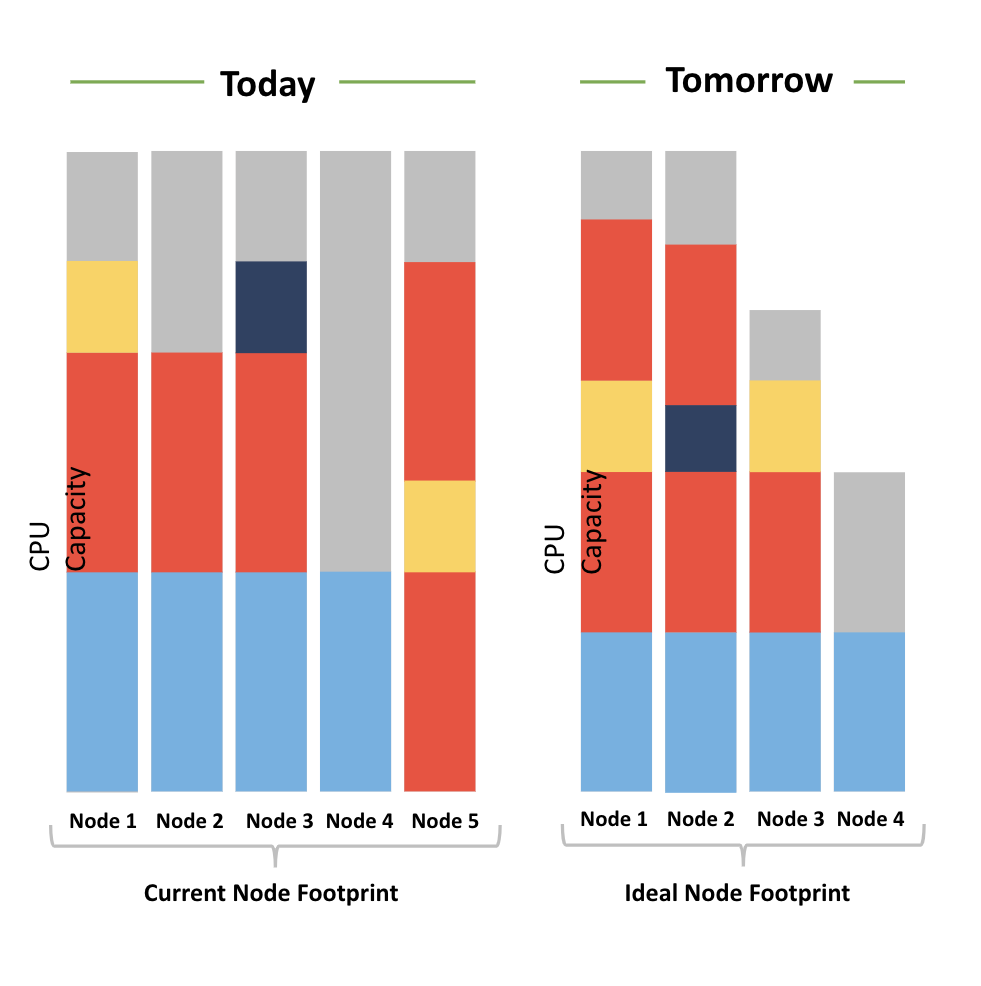

Adobe recently discussed the high cost of operating Kubernetes clusters at scale at the 2023 Cloud Native WebAssembly Day, co-located with KubeCon EU. Adobe’s Colin Murphy and Sean Isom said: “a lot of people are running Kubernetes but, when you have run this kind of multi-tenant setup, while it’s operationally excellent, it can be very expensive to run”. In their talk, they shared how they are combining CNCF wasmCloud and Kubernetes to give developers a path to deliver features faster and at lower cost.

Adobe: Ideal Node Footprint, from “Wasm + Kubernetes: Beyond Containers” by Sean Isom and Colin Murphy, Adobe

Beyond Containers

The next great technical abstraction, then, should address the common complexity of managing the day-to-day dependencies embedded in each and every application. It could also address the cost of operating applications distributed horizontally across clouds and edges for the sake of reliability and performance.

This doesn’t necessarily portend a break with either containers or Kubernetes. Enterprises and developers have made huge investments in security controls around the creation, inventory, operation, and diagnostics of containers. Containers, after all, did not replace virtual machines; they simply became another deployment method. Likewise, a next-generation abstraction can harness this existing control surface to accelerate adoption.

As an execution backend behind edge platforms such as Fastly Compute and Cloudflare Workers, WebAssembly seems positioned to be “what comes after containers”. Its small size, security, portability, and growing adoption are unlocking large gains for early adopters such as Adobe, Amazon, and Figma.

WebAssembly Component Model

From its earliest drafts, Wasm was designed to run well in non-web use cases (see the original use cases for Wasm from June 10, 2015). The web was the initial primary use case, but the design deliberately kept Wasm flexible across environments, and it is now on the path to providing relief to enterprise application developers on the server side. First, Wasm’s small binaries and secure sandbox mean it can be executed safely almost anywhere. It is compatible with today’s containerized Kubernetes environments without depending on them. Its small footprint allows it to run at significantly higher density than containers and, in many cases, it can even start fast enough to be executed on demand for each invocation.

Wasm goes further still in relieving the pain of enterprise development: the WebAssembly Component Model lets developers treat their traditional application dependencies as a pluggable platform. By defining common abstractions such as HTTP and SQL as interfaces, developers can declare that their applications require these capabilities without tightly coupling the application to a specific component that implements them.
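To make the idea concrete, here is a rough analogy in plain Python. It is not the Component Model itself (real components declare the interfaces they import in WIT, and a Wasm runtime links them); it only illustrates the shape of the contract. The `KeyValue` interface, the `InMemoryKeyValue` provider, and the `record_visit` function are all hypothetical names chosen for this sketch.

```python
from typing import Dict, Optional, Protocol


class KeyValue(Protocol):
    """Hypothetical capability contract, standing in for an interface a component imports."""

    def get(self, key: str) -> Optional[str]: ...

    def set(self, key: str, value: str) -> None: ...


class InMemoryKeyValue:
    """One hypothetical provider; a platform could bind a Redis- or SQL-backed one instead."""

    def __init__(self) -> None:
        self._data: Dict[str, str] = {}

    def get(self, key: str) -> Optional[str]:
        return self._data.get(key)

    def set(self, key: str, value: str) -> None:
        self._data[key] = value


def record_visit(store: KeyValue, user: str) -> int:
    """Business logic: relies only on the KeyValue contract, never on a concrete driver."""
    count = int(store.get(user) or 0) + 1
    store.set(user, str(count))
    return count


store = InMemoryKeyValue()
record_visit(store, "ada")
print(record_visit(store, "ada"))  # prints 2
```

The point of the analogy is that `record_visit` never imports a database driver; whichever object satisfies the contract is handed to it from outside, which is the role the Component Model assigns to the host.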

This flexibility yields two benefits for enterprise developers. First, they are freed from the time-consuming task of managing dependencies application by application. When a WebAssembly component has a vulnerability, developers (or the platform itself) simply load an updated, compatible version. Second, developers gain a whole new range of portability and pluggability options: they can migrate from one implementation of a compatible API to another without changing application code.
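The patching scenario can be sketched the same way, again as a plain-Python analogy rather than any real wasmCloud or Wasm runtime API. A hypothetical `Platform` binds a capability name (here the made-up `"html-render"`) to a provider at runtime; swapping `render_v1` (a stand-in for a vulnerable version that does not escape input) for `render_v2` (a stand-in for the patched version) changes behavior for the application without touching `greet_handler`.

```python
import html
from typing import Callable, Dict

Renderer = Callable[[str], str]


class Platform:
    """Hypothetical host that binds capability names to provider implementations at runtime."""

    def __init__(self) -> None:
        self._providers: Dict[str, Renderer] = {}

    def bind(self, capability: str, provider: Renderer) -> None:
        # Re-binding swaps the provider for every application that uses this capability.
        self._providers[capability] = provider

    def call(self, capability: str, arg: str) -> str:
        return self._providers[capability](arg)


def render_v1(name: str) -> str:
    # Stand-in for a vulnerable provider: does not escape user input.
    return "<p>Hello, " + name + "</p>"


def render_v2(name: str) -> str:
    # Stand-in for the patched provider: escapes HTML special characters.
    return "<p>Hello, " + html.escape(name) + "</p>"


def greet_handler(platform: Platform, name: str) -> str:
    """Application code: names the capability, never a concrete library or version."""
    return platform.call("html-render", name)


platform = Platform()
platform.bind("html-render", render_v1)
print(greet_handler(platform, "<b>Ada</b>"))  # <p>Hello, <b>Ada</b></p>

platform.bind("html-render", render_v2)  # the platform rolls out the patch
print(greet_handler(platform, "<b>Ada</b>"))  # <p>Hello, &lt;b&gt;Ada&lt;/b&gt;</p>
```

In this sketch the fix is applied once, in one place, and every handler bound to the capability picks it up; that is the operational property the paragraph above attributes to runtime-linked components.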

This tectonic shift in application architecture, from attaching application capabilities at compile time with containers to attaching them at runtime with WebAssembly, will dramatically reduce the operational and maintenance costs today’s developers incur. Imagine a world where developers simply write their business logic and the platform provides the common capabilities, always ensuring that the most up-to-date version is present at runtime. Imagine an enterprise that had to track and approve only one copy of each library in use, instead of one copy replicated across thousands of development projects.

The benefits grow even greater as platforms reach further into application stacks. Modern WebAssembly-based application platforms such as Cosmonic (built on CNCF wasmCloud) can accelerate innovation further by providing application capabilities on demand, automatic patching, and autoscaling. By decoupling applications from specific component implementations, developers can focus on delivering their business logic and driving innovation.

WebAssembly, the Final Abstraction

Today, we find ourselves on the cusp of the next great abstraction in technology. Technology is full of constant iterative improvements that contribute to the evolution of our application stacks. WebAssembly is not evolutionary in what it provides; it is revolutionary.

Just as virtual machines, the public cloud, and containers changed the way we organized and delivered our applications, Wasm will usher in the next generation of componentized, secure-by-default and portable applications. When WebAssembly enables our developers to focus only on delivering their business logic, it becomes not just the next great innovation, but the final abstraction.